This website presents the paper Human Strategic Steering Improves Performance of Interactive Optimization.

What is it about?

We wanted to study how users behave when using an interactive optimisation system (e.g. a recommender system), where they need to provide feedback in order to reach a goal.

The main points are:

- Users are not always truthful in their feedback

- Some users steer the AI towards their goal, and hence reach the maximum in fewer iterations

- Users may leak information when they steer

Explanatory example

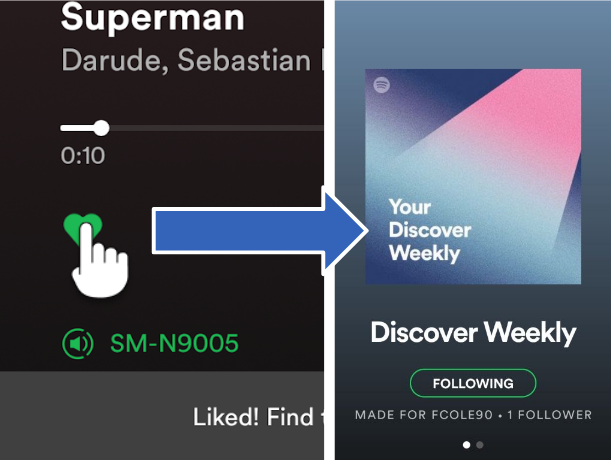

Suppose a user is interacting with a music recommender system like Spotify's. We would expect the user to be truthful, that is, to press "like" for items they actually like.

However, some users may instead "lie" in order to reach a goal sooner. In this case, the goal could be to get more recommendations of a specific music genre. If the user believes the system recommends songs similar to the liked ones, they might start liking songs that are similar to the wanted genre but that they don't actually like. The feedback then no longer expresses only a preference for the item; it is also a means of steering the system towards the user's goal, which is also the system's goal.

Steering

We here define the concept of steering.

If a user collaborating with an interactive optimisation system

- learns a model of the system

- provides untruthful feedback with a strategy aimed at reaching the goal earlier

We say that the user is trying to steer the system towards their goal.
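As a rough illustration of the second ingredient (untruthful but strategic feedback), the sketch below contrasts a truthful user with a steering user who is asked for the value of a 1D function at a point x. Everything here is an assumption made for illustration: the function, the names `GOAL_X`, `truthful_feedback` and `steering_feedback`, and the particular strategy are hypothetical and are not the strategies observed in the study. The first ingredient, learning a model of the system, is not modelled.

```python
import numpy as np

GOAL_X = 0.7  # hypothetical location of the maximum, visible only to the user


def hidden_function(x):
    """Hypothetical function the user can see; its peak is at GOAL_X."""
    return float(np.exp(-((x - GOAL_X) / 0.2) ** 2))


def truthful_feedback(x):
    """Report the true value at the queried point."""
    return hidden_function(x)


def steering_feedback(x, strength=1.0):
    """Under-report values in proportion to the distance from the goal.

    One made-up strategy: the further a query is from the goal, the worse
    the user makes it look, hoping the system moves on sooner.
    """
    return hidden_function(x) - strength * abs(x - GOAL_X)


for x in (0.1, 0.4, 0.7):
    bias = steering_feedback(x) - truthful_feedback(x)
    print(f"x={x:.1f}  truthful={truthful_feedback(x):.3f}  bias={bias:+.3f}")
```

Whether a strategy like this actually helps depends on how the system reacts to the feedback, which is why the definition also requires the user to have a model of the system.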

System implementation

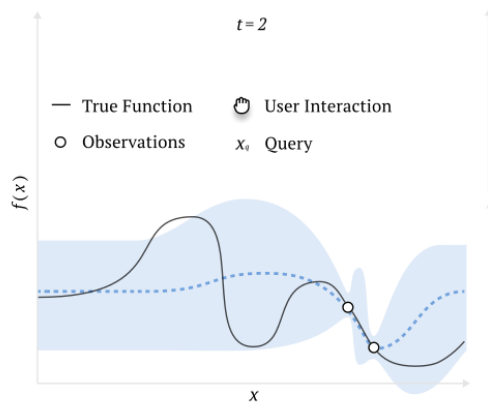

In order to study this behaviour in the simplest yet most flexible way, we implemented our system as an interactive 1D Bayesian Optimisation using a Gaussian kernel and Upper Confidence Bound as the acquisition function.

- The AI cannot see the function, so it tries to find the maximum by asking the user for the height of the function at some query point x

- The user can see the function and provides a value y_u for each point x queried by the AI

- The user’s y_u may differ from f(x)
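The loop described above can be sketched in a few lines of NumPy. This is a minimal sketch and not the authors' implementation: the hidden function, the kernel length scale, the UCB weight `BETA`, the grid resolution, the first query point, and all function names are assumptions chosen for illustration. The `user` argument is any callable that returns y_u for a query x, so a truthful user is just one possible choice.

```python
import numpy as np

LENGTH_SCALE = 0.1   # assumed kernel width, not taken from the paper
NOISE = 1e-4         # small jitter for numerical stability
BETA = 2.0           # assumed exploration weight in UCB


def hidden_function(x):
    """Hypothetical hidden function f with a single peak at x = 0.7."""
    return np.exp(-((x - 0.7) / 0.2) ** 2)


def truthful_user(x):
    """A user who reports y_u = f(x); a steering user would deviate from it."""
    return float(hidden_function(x))


def gaussian_kernel(a, b):
    """Gaussian (RBF) kernel matrix between two 1D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / LENGTH_SCALE) ** 2)


def gp_posterior(x_obs, y_obs, x_grid):
    """Posterior mean and standard deviation of a zero-mean GP on x_grid."""
    K = gaussian_kernel(x_obs, x_obs) + NOISE * np.eye(len(x_obs))
    K_s = gaussian_kernel(x_obs, x_grid)
    weights = np.linalg.solve(K, K_s)              # K^{-1} K_s
    mean = weights.T @ y_obs
    var = 1.0 - np.sum(K_s * weights, axis=0)      # prior variance is 1
    return mean, np.sqrt(np.clip(var, 0.0, None))


def run_interactive_bo(user, n_iter=30, grid_size=200):
    """Query the user n_iter times, choosing each x by Upper Confidence Bound."""
    x_grid = np.linspace(0.0, 1.0, grid_size)
    x_obs = np.array([0.5])                        # arbitrary first query
    y_obs = np.array([user(0.5)])
    for _ in range(n_iter - 1):
        mean, std = gp_posterior(x_obs, y_obs, x_grid)
        x_next = x_grid[np.argmax(mean + BETA * std)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, user(x_next))
    return x_obs, y_obs


x_obs, y_obs = run_interactive_bo(truthful_user)
best = x_obs[np.argmax(y_obs)]
print(f"best queried x: {best:.2f}")
```

Because the optimiser only ever sees the reported values, passing a different `user` callable changes which points it queries next; this is the lever a steering user pulls.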

Results

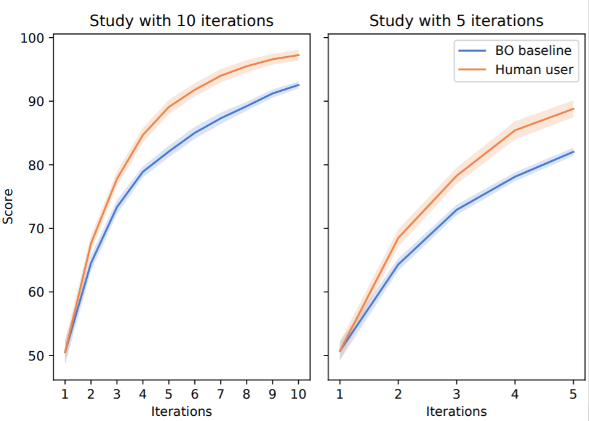

- Steering users perform statistically significantly better than the baseline: t(599) = 4.1, p < 0.001

- Steering users’ scores increased faster than the baseline: t(596) = 2.2, p = 0.031

- Next generation intelligent systems could exploit such steering behavior to further improve performance

- Exploiting steering requires user models capable of anticipating such behaviour

Conclusions

- Users strategically steer intelligent systems to control them

- Steering users can achieve performance improvements

- Next generation intelligent systems could exploit such steering behavior to further improve performance

- Exploiting steering requires user models capable of anticipating such behaviour

Abstract

A central concern in an interactive intelligent system is optimization of its actions, to be maximally helpful to its human user. In recommender systems for instance, the action is to choose what to recommend, and the optimization task is to recommend items the user prefers. The optimization is done based on earlier user's feedback (e.g. "likes" and "dislikes"), and the algorithms assume the feedback to be faithful. That is, when the user clicks “like,” they actually prefer the item. We argue that this fundamental assumption can be extensively violated by human users, who are not passive feedback sources. Instead, they are in control, actively steering the system towards their goal. To verify this hypothesis, that humans steer and are able to improve performance by steering, we designed a function optimization task where a human and an optimization algorithm collaborate to find the maximum of a 1-dimensional function. At each iteration, the optimization algorithm queries the user for the value of a hidden function f at a point x, and the user, who sees the hidden function, provides an answer about f(x). Our study on 21 participants shows that users who understand how the optimization works, strategically provide biased answers (answers not equal to f(x)), which results in the algorithm finding the optimum significantly faster. Our work highlights that next-generation intelligent systems will need user models capable of helping users who steer systems to pursue their goals.

Reference

Fabio Colella, Pedram Daee, Jussi Jokinen, Antti Oulasvirta, Samuel Kaski

Human Strategic Steering Improves Performance of Interactive Optimization

UMAP 2020

DOI: doi.org/10.1145/3340631.3394883

Contact

Each author: name.surname@aalto.fi

Work done in the Probabilistic Machine Learning research group at Aalto University. The research group is part of the Finnish Center for Artificial Intelligence (FCAI) and the Helsinki Institute for Information Technology (HIIT).

Acknowledgments

We thank Mustafa Mert Çelikok, Tomi Peltola, Antti Keurulainen, Petrus Mikkola, and Kashyap Todi for helpful discussions. This work was supported by the Academy of Finland (Flagship programme: Finnish Center for Artificial Intelligence, FCAI; grants 310947, 319264, 292334, 313195; project BAD: grant 318559). AO was additionally supported by HumaneAI (761758) and the European Research Council StG project COMPUTED. We acknowledge the computational resources provided by the Aalto Science-IT Project.