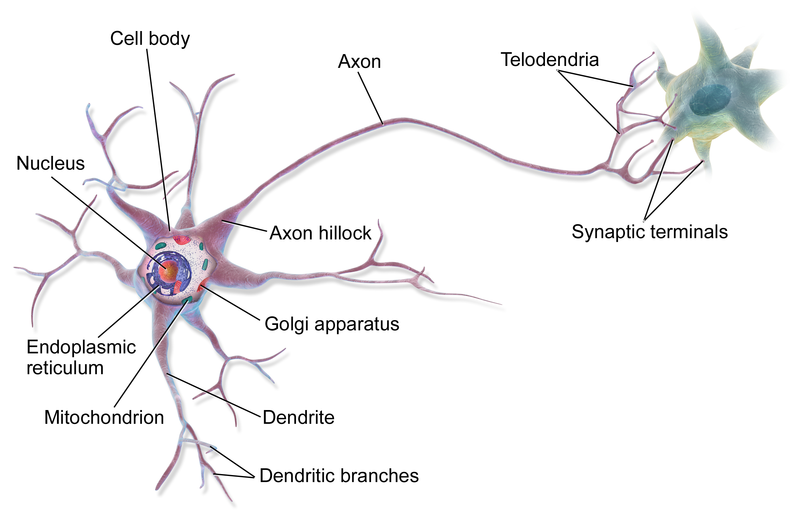

Neurons are the cells responsible for transmitting signals through the human nervous system. A single neuron cannot achieve much by itself, but groups of them can form highly complex networks with billions of connections. Neural networks can be considered the brain's analogue of a computer's processor: a powerful calculating machine.

Contents at a glance:

- Real neural networks and Artificial Neural Networks

- The abilities and limits of current Artificial Neural Network models

Schematic visualisation of a multipolar neuron.

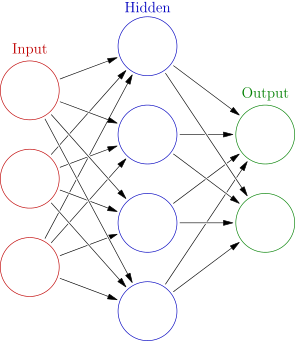

Artificial Neural Networks (ANNs) are mathematical models that mimic the functioning of biological neural networks, and it is thanks to them that artificial intelligence research is experiencing a new spring today. The artificial neuron is modelled simply as a summation unit that computes a weighted sum of the inputs coming from its afferent neurons. Its output depends on the value of this weighted sum and on the activation function applied to it.
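As a rough illustration of the summation unit described above, here is a minimal Python sketch. The logistic (sigmoid) activation, the optional `bias` term, the function names and the input values are all assumptions chosen for the example; the article itself does not prescribe any of them.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Compute the weighted sum of the inputs and pass it through the activation.

    The bias term is an assumption of this sketch, not something the text mentions.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)


# Three afferent neurons feeding one artificial neuron (made-up values).
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.4]
print(neuron_output(inputs, weights))
```

Swapping `sigmoid` for another function (for example the identity, which gives a purely linear neuron) changes how the weighted sum is turned into an output, while the summation step stays the same.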

An artificial neural network is an interconnected group of nodes, akin to the vast network of neurons in a brain. Here, each circular node represents an artificial neuron and an arrow represents a connection from the output of one neuron to the input of another. – Wikipedia

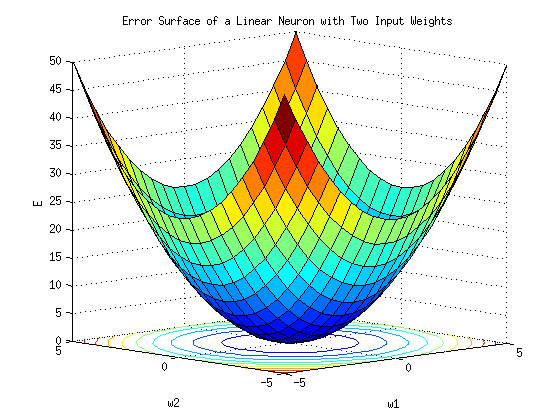

What makes neurons especially powerful is their ability to learn. Learning has been found to depend on the establishment, strengthening or weakening of connections between neurons. Artificial neural networks mimic this mechanism through the values of the weights that represent the connections: the lower (or higher) the absolute value of a weight, the weaker (or stronger) the connection.

Error surface of a linear neuron with two input weights. - By AI456 - Graphed with MatLab - This graphic was created with MATLAB, CC BY-SA 3.0

The algorithm responsible for these learning operations in ANNs is backpropagation. It is a technique that uses derivatives to find the connection pattern that minimises the error on a given task. It was popularised in the 1980s, but only recently have computers become powerful enough to run it on complex multilayered networks. This led to the deep learning revolution that we are experiencing today, and to very complex artificial intelligences that can beat the strongest human players at Go, drive cars and act as everyone's personal assistant.
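To make the role of derivatives more concrete, the sketch below trains a single linear neuron with two input weights by gradient descent on the mean squared error. This is only the single-neuron special case: backpropagation proper applies the same idea across many layers using the chain rule. The function name, learning rate, number of epochs and toy data are assumptions made up for this example.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=1000):
    """Fit output = w1*x1 + w2*x2 by gradient descent on the mean squared error."""
    w1, w2 = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w1 = 0.0
        grad_w2 = 0.0
        for (x1, x2), target in samples:
            error = (w1 * x1 + w2 * x2) - target
            # The derivative of 0.5 * error**2 with respect to each weight
            # is the error multiplied by the corresponding input.
            grad_w1 += error * x1
            grad_w2 += error * x2
        # Step downhill on the error surface, against the average gradient.
        w1 -= learning_rate * grad_w1 / n
        w2 -= learning_rate * grad_w2 / n
    return w1, w2


# Toy data generated from the weights (2.0, -1.0); made up for illustration.
samples = [
    ((1.0, 0.0), 2.0),
    ((0.0, 1.0), -1.0),
    ((1.0, 1.0), 1.0),
    ((2.0, 1.0), 3.0),
]
w1, w2 = train_linear_neuron(samples)
print(w1, w2)
```

Each update moves the pair of weights a small step downhill on the error surface, so with this data the learned values should approach the weights that generated it.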

Geoffrey Hinton giving a lecture about deep neural networks at the University of British Columbia. He was one of the first researchers to demonstrate the use of the generalized backpropagation algorithm for training multi-layer neural nets and is an important figure in the deep learning community. - By Eviatar Bach - Own work, CC BY-SA 3.0

However, ANNs as currently modelled require long and demanding training before they can do all these marvellous things, which is the reason behind the immense growth in the value of large collections of data, usually known as Big Data. These limits have troubled even one of the pioneers of the backpropagation algorithm, Geoffrey Hinton, who recently suggested getting rid of the current learning method and trying to discover something totally new. Clearly backpropagation cannot be discarded overnight, but developing new ways of modelling learning is a major interest of artificial intelligence researchers.

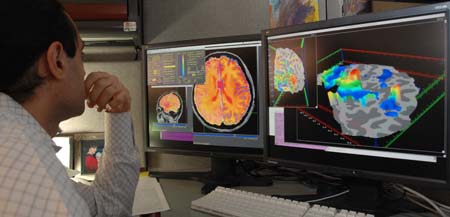

Researcher checking fMRI images - By NIMH - US Department of Health and Human Services: National Institute of Mental Health, Public Domain

The road to more powerful techniques for mimicking learning is long and perilous, but new discoveries in neuroscience may open up new and unexpected directions. Studying the behaviour and inner workings of the brain can pave the way to new state-of-the-art algorithms and techniques for machine learning.

By Fabio Colella and Michele Vantini

Cross posted on: BRAIN-log